Update README.md


README.md

CHANGED

|

@@ -15,18 +15,20 @@ This is a lora + gradient merge between:

|

|

| 15 |

- [Airoboros l2 13b gpt4 2.0](https://huggingface.co/jondurbin/airoboros-l2-13b-gpt4-2.0)

|

| 16 |

- [LimaRP llama 2 Lora](https://huggingface.co/lemonilia/limarp-llama2) from July 28, 2023 at a weight of 0.25.

|

| 17 |

|

|

|

|

|

|

|

| 18 |

Chronos was used as the base model here.

|

| 19 |

|

| 20 |

The merge was performed using [BlockMerge_Gradient](https://github.com/Gryphe/BlockMerge_Gradient) by Gryphe

|

| 21 |

|

| 22 |

For this merge:

|

| 23 |

-

- Chronos was merged with LimaRP

|

| 24 |

- Airoboros was added in an inverted curve gradient at a 0.9 ratio and slowly trickled down to 0 at the 25th layer.

|

| 25 |

|

| 26 |

-

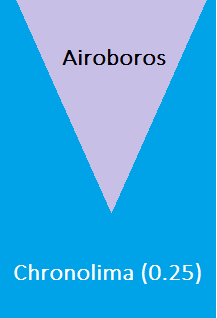

I have provided an illustration to help visualize this merge.

|

| 27 |

-

|

| 16 |

- [LimaRP llama 2 Lora](https://huggingface.co/lemonilia/limarp-llama2) from July 28, 2023 at a weight of 0.25.

|

| 17 |

|

| 18 |

+

You can check out the sister model [airolima chronos grad l2 13B](https://huggingface.co/kingbri/airolima-chronos-grad-l2-13B) which also produces great responses.

|

| 19 |

+

|

| 20 |

Chronos was used as the base model here.

|

| 21 |

|

| 22 |

The merge was performed using [BlockMerge_Gradient](https://github.com/Gryphe/BlockMerge_Gradient) by Gryphe

|

| 23 |

|

| 24 |

For this merge:

|

| 25 |

+

- Chronos was merged with LimaRP at a 0.25 weight

|

| 26 |

- Airoboros was added in an inverted curve gradient at a 0.9 ratio and slowly trickled down to 0 at the 25th layer.

|

| 27 |

|

| 28 |

+

I have provided an illustration to help visualize this merge.

|

| 29 |

+

|

| 30 |

|

| 31 |

+

Unlike a basic ratio merge (e.g., 75/25), gradient merging lets Airoboros give its input at the beginning as the "core response"; the Chronos + LimaRP merge (chronolima) then refines it and produces the output.
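As a rough illustration of the gradient described above, the sketch below computes a per-layer blend ratio for Airoboros that starts at 0.9 and reaches 0 at layer 25. This is not BlockMerge_Gradient's actual code: the linear ramp stands in for the "inverted curve" (whose exact shape is not given here), the 0-indexed layer count is an assumption, and the names `airoboros_ratio` and `blend` are hypothetical.

```python
# Illustrative sketch only; not taken from BlockMerge_Gradient.
NUM_LAYERS = 40    # Llama 2 13B has 40 transformer layers
START_RATIO = 0.9  # Airoboros share at the first layer (from the README)
END_LAYER = 25     # layer at which the Airoboros share reaches 0 (assumed 0-indexed)


def airoboros_ratio(layer: int) -> float:
    """Share of Airoboros weights used at a given layer.

    Assumption: a linear ramp from START_RATIO down to 0.
    The real merge curve may have a different shape.
    """
    if layer >= END_LAYER:
        return 0.0
    return START_RATIO * (1 - layer / END_LAYER)


def blend(airoboros_w: float, chronolima_w: float, layer: int) -> float:
    """Interpolate one pair of weights for the given layer."""
    r = airoboros_ratio(layer)
    return r * airoboros_w + (1 - r) * chronolima_w


# One ratio per layer: high in the early layers, zero from END_LAYER onward.
ratios = [airoboros_ratio(i) for i in range(NUM_LAYERS)]
```

A fixed 75/25 merge would use the same ratio at every layer; here the ratio changes with depth, so the later layers come entirely from the Chronos + LimaRP side.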

|

| 32 |

|

| 33 |

LimaRP was merged at a lower weight to correct Chronos rather than overhaul it. Higher weights (like those used in single-model LoRA merges) completely destroyed a character's personality and made chatting bland.
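To make the effect of the merge weight concrete, here is a minimal sketch of folding a LoRA update into a base weight matrix at a weight of 0.25. It follows the standard LoRA formulation (base plus a scaled low-rank product), but the helper names and the tiny matrices are hypothetical, and the script actually used for this model may apply the weight differently.

```python
LORA_WEIGHT = 0.25  # merge weight stated in the README


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def merge_lora(base, lora_b, lora_a, scaling=1.0, weight=LORA_WEIGHT):
    """Return base + weight * scaling * (lora_b @ lora_a).

    `scaling` stands for the usual LoRA alpha / rank factor (assumed 1.0 here).
    """
    delta = matmul(lora_b, lora_a)
    return [
        [w + weight * scaling * d for w, d in zip(base_row, delta_row)]
        for base_row, delta_row in zip(base, delta)
    ]


# Toy 2x2 example with a rank-1 LoRA; the values are made up for illustration.
base = [[1.0, 0.0], [0.0, 1.0]]
lora_b = [[1.0], [2.0]]  # shape (2, rank)
lora_a = [[4.0, 8.0]]    # shape (rank, 2)
merged = merge_lora(base, lora_b, lora_a)
```

With `weight=1.0` the full LoRA delta is applied; at 0.25 only a quarter of it is added, which matches the idea of nudging the base model rather than replacing its behavior.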

|

| 34 |

|

|

|

|

| 70 |

|

| 71 |

## Bias, Risks, and Limitations

|

| 72 |

|

| 73 |

+

Chronos is biased toward very expressive writing and very long responses. LimaRP is trained on human RP data from niche internet forums. This model is not intended to supply factual information or advice in any form.

|

| 74 |

|

| 75 |

## Training Details

|

| 76 |

|