---
license: apache-2.0
language:
- en
pipeline_tag: text-classification
---

Model Description

This model is IBM's 12-layer binary toxicity classifier for English, intended for use as a guardrail for large language models. It was trained on several English benchmark datasets to detect hateful, abusive, profane, and other toxic content in plain text.
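Because the classifier returns a toxicity probability for each input, a simple guardrail can score every LLM response and withhold those at or above a threshold. The sketch below illustrates that gating logic only and is not part of the model's API: `guard_responses`, `REFUSAL`, `keyword_stub_scores`, and the 0.5 default threshold are all hypothetical, and `score_fn` stands in for any function that returns one toxicity probability per text (for example, one wrapping the `probability` computation in the usage example).

```python
from typing import Callable, List, Sequence

# Hypothetical placeholder returned in place of a blocked response.
REFUSAL = "[response withheld: flagged as potentially toxic]"


def guard_responses(
    responses: Sequence[str],
    score_fn: Callable[[List[str]], List[float]],
    threshold: float = 0.5,
) -> List[str]:
    """Return responses unchanged unless their toxicity score reaches `threshold`.

    `score_fn` is assumed to return one toxicity probability in [0, 1] per text,
    in the same order as its input (e.g. the classifier's class-1 softmax output).
    """
    scores = score_fn(list(responses))
    if len(scores) != len(responses):
        raise ValueError("score_fn must return exactly one score per response")
    # Replace any response at or above the threshold; pass the rest through.
    return [REFUSAL if score >= threshold else text for text, score in zip(responses, scores)]


def keyword_stub_scores(texts: List[str]) -> List[float]:
    """Stand-in scorer for demonstration only; this is NOT the classifier."""
    return [0.95 if "idiot" in t.lower() else 0.05 for t in texts]


if __name__ == "__main__":
    print(guard_responses(["Happy to help!", "You idiot."], keyword_stub_scores))
```

Keeping the scorer as a parameter lets the same gate wrap either this model or granite-guardian-hap-38m, and lets the gating logic be tested without loading a model.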

Model Usage

# Example of how to use the model

import torch
from transformers import AutoModelForSequenceClassification, AutoTokenizer

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

model_name_or_path = "ibm-granite/granite-guardian-hap-125m"
model = AutoModelForSequenceClassification.from_pretrained(model_name_or_path)
tokenizer = AutoTokenizer.from_pretrained(model_name_or_path)
model.to(device)

# Sample text
text = ["This is the 1st test", "This is the 2nd test"]

# Tokenize the batch (pad to equal length, truncate to the model's maximum) and move it to the device.
inputs = tokenizer(text, padding=True, truncation=True, return_tensors="pt").to(device)

# Inference only: no gradients are needed.
with torch.no_grad():
    logits = model(**inputs).logits

prediction = torch.argmax(logits, dim=1).cpu().numpy().tolist()  # Binary prediction where label 1 indicates toxicity.
probability = torch.softmax(logits, dim=1).cpu().numpy()[:, 1].tolist()  # Probability of toxicity.
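The `prediction` line takes the argmax over the two logits, which for a binary classifier amounts to flagging text whose toxicity probability exceeds 0.5. When a deployment needs fewer false positives (or fewer misses), the decision threshold can be moved instead. The sketch below does this in plain Python on logits converted with `logits.cpu().tolist()`; `toxicity_from_logits` and its `threshold` parameter are hypothetical helpers, and the column order [non-toxic, toxic] is assumed from the note that label 1 indicates toxicity.

```python
import math
from typing import List, Sequence, Tuple


def toxicity_from_logits(
    logits: Sequence[Sequence[float]], threshold: float = 0.5
) -> Tuple[List[int], List[float]]:
    """Map rows of [non-toxic, toxic] logits to (labels, toxicity probabilities)."""
    labels: List[int] = []
    probs: List[float] = []
    for row in logits:
        if len(row) != 2:
            raise ValueError("expected exactly two logits per example")
        z0, z1 = row
        d = z1 - z0
        # softmax(z)[1] == sigmoid(z1 - z0); branch on the sign to avoid exp overflow.
        if d >= 0:
            p_toxic = 1.0 / (1.0 + math.exp(-d))
        else:
            e = math.exp(d)
            p_toxic = e / (1.0 + e)
        probs.append(p_toxic)
        # Strict '>' so an exact tie (p == 0.5) maps to label 0, as argmax does.
        labels.append(1 if p_toxic > threshold else 0)
    return labels, probs
```

At the default threshold the labels match the argmax labels above, up to floating-point rounding at near-ties; any other threshold is a judgment call best made on held-out data.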

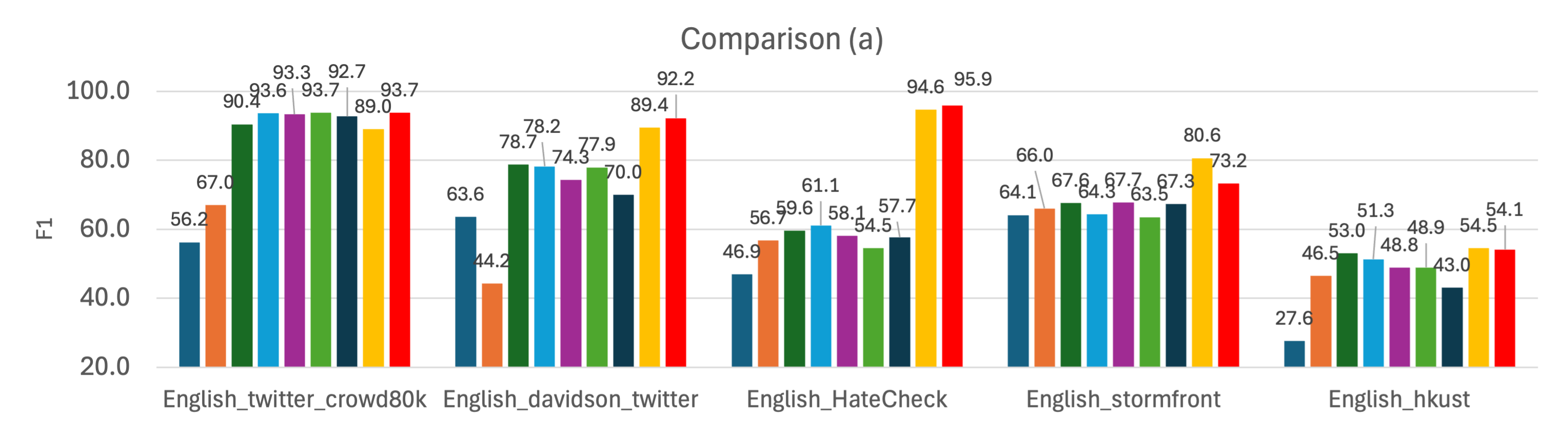

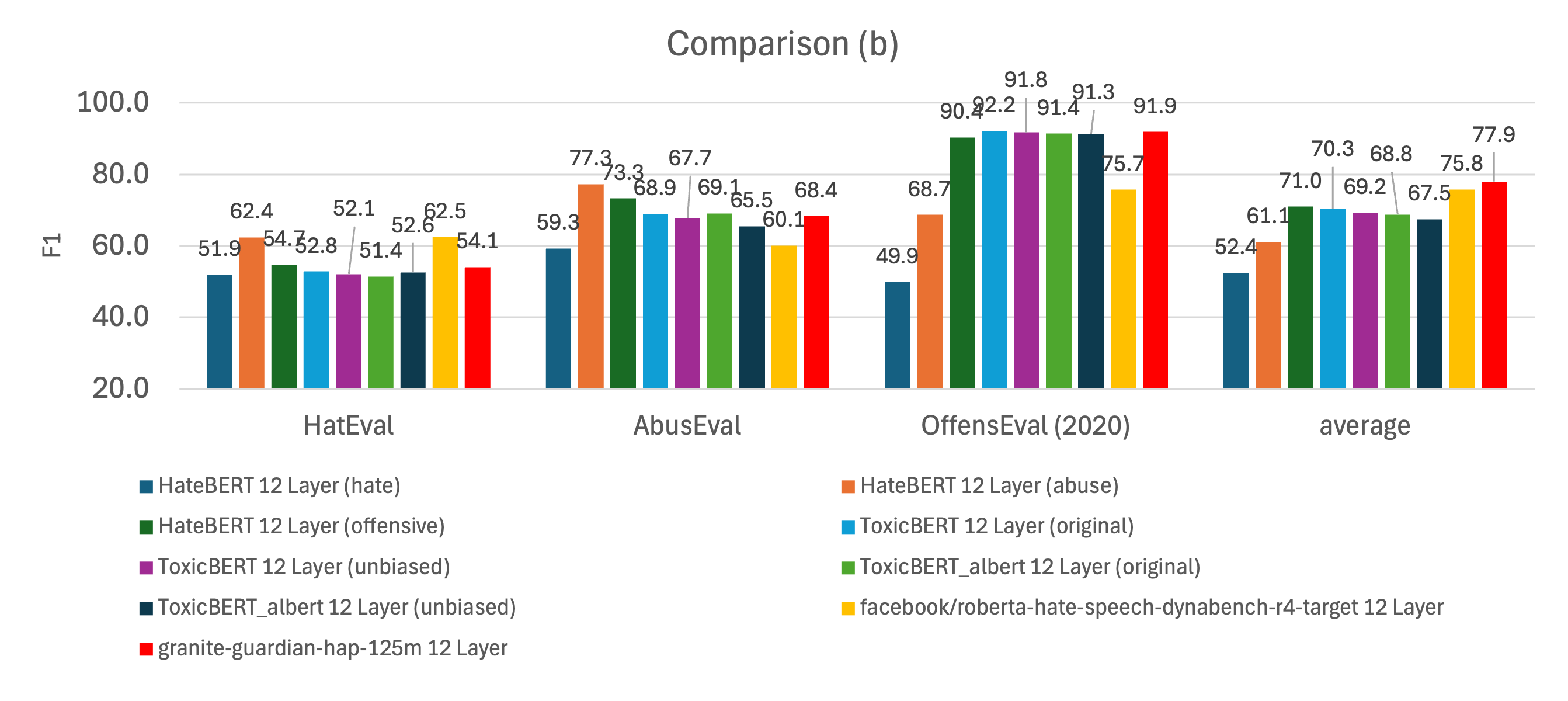

Performance Comparison with Other Models

Across eight mainstream toxicity benchmarks, this model achieves a higher average score than the other models compared. If inference speed is the priority, consider the lighter 4-layer IBM model, granite-guardian-hap-38m.