Commit

•

2b6e261

1

Parent(s):

33f491a

Upload folder using huggingface_hub

- README.md +78 -0

- added_tokens.json +5 -0

- berserk.png +0 -0

- config.json +40 -0

- generation_config.json +4 -0

- pytorch_model.bin +3 -0

- sd.png +0 -0

- special_tokens_map.json +6 -0

- tokenizer.json +0 -0

- tokenizer.model +3 -0

- tokenizer_config.json +94 -0

- trainer_state.json +0 -0

- training_args.bin +0 -0

README.md

ADDED

|

@@ -0,0 +1,78 @@


| 1 |

+

---

|

| 2 |

+

license: apache-2.0

|

| 3 |

+

pipeline_tag: visual-question-answering

|

| 4 |

+

---

|

| 5 |

+

|

| 6 |

+

## About

|

| 7 |

+

|

| 8 |

+

This model was trained with the [BakLLaVA](https://github.com/SkunkworksAI/BakLLaVA/) repo, using [TinyLlama](https://huggingface.co/PY007/TinyLlama-1.1B-Chat-v0.3) as the base model.

|

| 9 |

+

|

| 10 |

+

## Examples

|

| 11 |

+

|

| 12 |

+

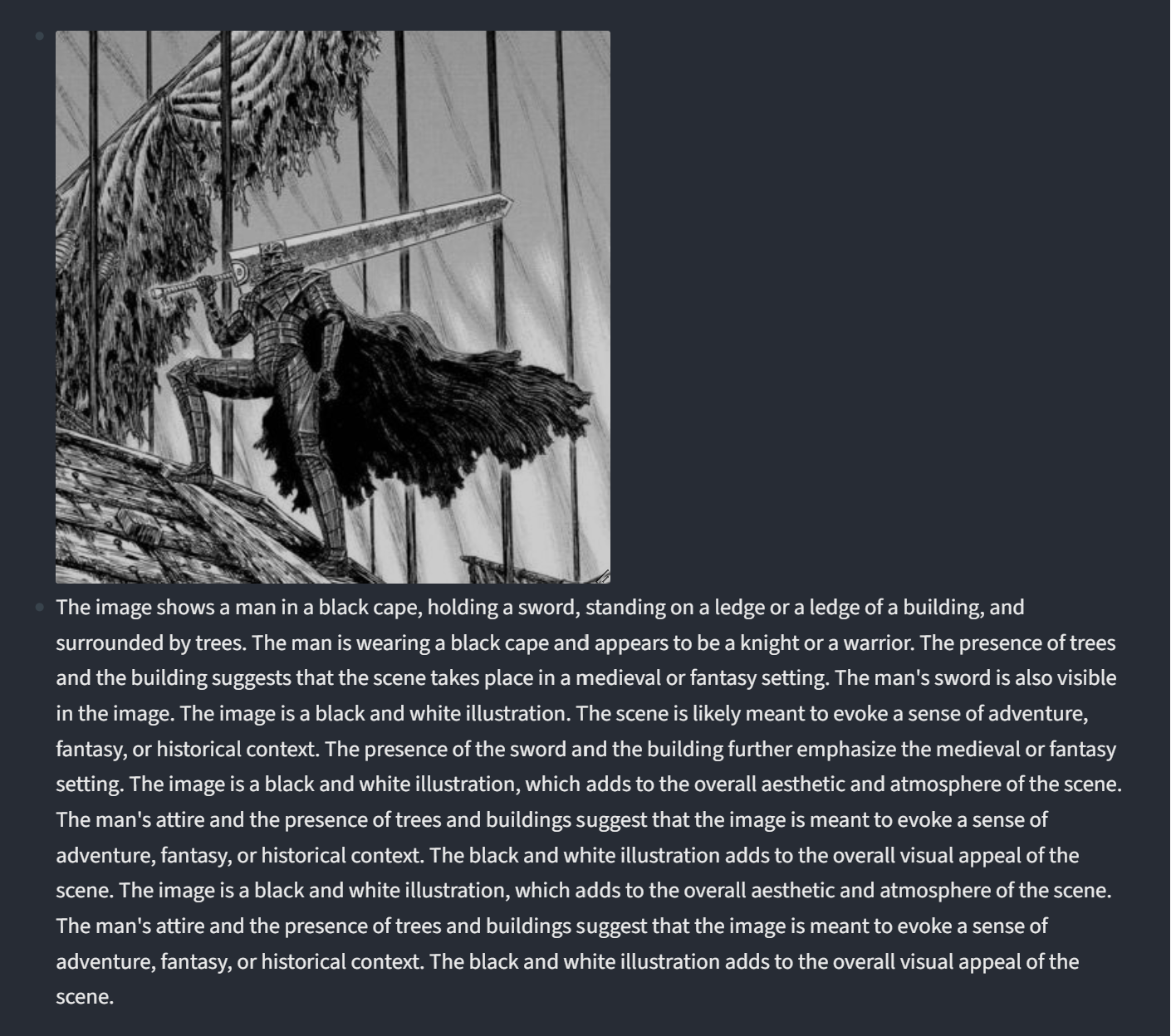

The prompt for both examples was "What is shown in the given image?"

|

| 13 |

+

|

| 14 |

+

<img src="berserk.png" width="50%">

|

| 15 |

+

|

| 16 |

+

<br>

|

| 17 |

+

|

| 18 |

+

<img src="sd.png" width="50%">

|

| 19 |

+

|

| 20 |

+

|

| 21 |

+

## Install

|

| 22 |

+

|

| 23 |

+

If you are not using Linux, do *NOT* proceed; see the instructions for [macOS](https://github.com/haotian-liu/LLaVA/blob/main/docs/macOS.md) and [Windows](https://github.com/haotian-liu/LLaVA/blob/main/docs/Windows.md) instead.

|

| 24 |

+

|

| 25 |

+

1. Clone the LLaVA repository and navigate to the LLaVA folder

|

| 26 |

+

```bash

|

| 27 |

+

git clone https://github.com/haotian-liu/LLaVA.git

|

| 28 |

+

cd LLaVA

|

| 29 |

+

```

|

| 30 |

+

|

| 31 |

+

2. Install Package

|

| 32 |

+

```Shell

|

| 33 |

+

conda create -n llava python=3.10 -y

|

| 34 |

+

conda activate llava

|

| 35 |

+

pip install --upgrade pip # enable PEP 660 support

|

| 36 |

+

pip install -e .

|

| 37 |

+

```

|

| 38 |

+

|

| 39 |

+

3. Install additional packages for training cases

|

| 40 |

+

```

|

| 41 |

+

pip install -e ".[train]"

|

| 42 |

+

pip install flash-attn --no-build-isolation

|

| 43 |

+

```

|

| 44 |

+

|

| 45 |

+

### Upgrade to latest code base

|

| 46 |

+

|

| 47 |

+

```Shell

|

| 48 |

+

git pull

|

| 49 |

+

pip install -e .

|

| 50 |

+

```

|

| 51 |

+

|

| 52 |

+

|

| 53 |

+

#### Launch a controller

|

| 54 |

+

```Shell

|

| 55 |

+

python -m llava.serve.controller --host 0.0.0.0 --port 10000

|

| 56 |

+

```

|

| 57 |

+

|

| 58 |

+

#### Launch a Gradio web server

|

| 59 |

+

```Shell

|

| 60 |

+

python -m llava.serve.gradio_web_server --controller http://localhost:10000 --model-list-mode reload

|

| 61 |

+

```

|

| 62 |

+

You just launched the Gradio web interface. Now, you can open the web interface with the URL printed on the screen. You may notice that there is no model in the model list. Do not worry, as we have not launched any model worker yet. It will be automatically updated when you launch a model worker.

|

| 63 |

+

|

| 64 |

+

#### Launch a model worker

|

| 65 |

+

|

| 66 |

+

This is the actual *worker* that performs the inference on the GPU. Each worker is responsible for a single model specified in `--model-path`.

|

| 67 |

+

|

| 68 |

+

```Shell

|

| 69 |

+

python -m llava.serve.model_worker --host 0.0.0.0 --controller http://localhost:10000 --port 40000 --worker http://localhost:40000 --model-path ameywtf/tinyllava-1.1b-v0.1

|

| 70 |

+

```

|

| 71 |

+

Wait until the process finishes loading the model and you see "Uvicorn running on ...". Now, refresh your Gradio web UI, and you will see the model you just launched in the model list.

|

| 72 |

+

|

| 73 |

+

You can launch as many workers as you want and compare different model checkpoints in the same Gradio interface. Keep `--controller` the same, and give each worker its own port number in both `--port` and `--worker`.

|

| 74 |

+

```Shell

|

| 75 |

+

python -m llava.serve.model_worker --host 0.0.0.0 --controller http://localhost:10000 --port <different from 40000, say 40001> --worker http://localhost:<change accordingly, e.g. 40001> --model-path <ckpt2>

|

| 76 |

+

```

|

| 77 |

+

|

| 78 |

+

If you are using an Apple device with an M1 or M2 chip, you can specify the mps device by using the `--device` flag: `--device mps`.
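The multi-worker setup described above can be scripted. The following is a minimal sketch, not part of the LLaVA repo: the helper name `worker_command` and the idea of numbering ports upward from 40000 are assumptions made for illustration, and only the flags already shown in this README are used. It builds one command line per checkpoint, keeping `--controller` fixed and pairing `--port` with a matching `--worker` URL.

```python
import shlex
from typing import List, Optional

# Controller address taken from the README commands above.
CONTROLLER = "http://localhost:10000"


def worker_command(model_path: str, port: int, device: Optional[str] = None) -> List[str]:
    """Build the argv for one llava.serve.model_worker process (hypothetical helper)."""
    cmd = [
        "python", "-m", "llava.serve.model_worker",
        "--host", "0.0.0.0",
        "--controller", CONTROLLER,
        "--port", str(port),
        # The worker URL must advertise the same port the worker listens on.
        "--worker", f"http://localhost:{port}",
        "--model-path", model_path,
    ]
    if device is not None:
        # For example "mps" on Apple silicon, as noted in the README.
        cmd += ["--device", device]
    return cmd


if __name__ == "__main__":
    # "<ckpt2>" is the README's own placeholder, kept as-is.
    checkpoints = ["ameywtf/tinyllava-1.1b-v0.1", "<ckpt2>"]
    for offset, path in enumerate(checkpoints):
        print(shlex.join(worker_command(path, 40000 + offset)))
```

Each printed line can be run in its own terminal; the list form can also be passed to `subprocess.Popen` if you prefer to start the workers from Python.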

|

added_tokens.json

ADDED

|

@@ -0,0 +1,5 @@


| 1 |

+

{

|

| 2 |

+

"<|im_end|>": 32002,

|

| 3 |

+

"<|im_start|>": 32001,

|

| 4 |

+

"[PAD]": 32000

|

| 5 |

+

}
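As a sanity check on these ids, the sketch below confirms that the three added tokens form a contiguous block that ends exactly at `vocab_size - 1`, using the `vocab_size` of 32003 from the `config.json` in this same commit. The function name `check_added_tokens` is hypothetical, and the conclusion that the base vocabulary holds 32000 entries is an inference from the ids, not something the commit states.

```python
import json

# Contents of added_tokens.json as shown in the diff above.
ADDED_TOKENS_JSON = '{"<|im_end|>": 32002, "<|im_start|>": 32001, "[PAD]": 32000}'
# "vocab_size" from config.json in the same commit.
VOCAB_SIZE = 32003


def check_added_tokens(added, vocab_size):
    """Return the base vocabulary size implied by the added-token ids."""
    ids = sorted(added.values())
    base = ids[0]
    # The added ids should be contiguous, with no gaps or duplicates.
    if ids != list(range(base, base + len(ids))):
        raise ValueError(f"added token ids are not contiguous: {ids}")
    # The last added id should be the final row of the embedding table.
    if ids[-1] != vocab_size - 1:
        raise ValueError(f"last id {ids[-1]} does not match vocab_size {vocab_size}")
    return base


base_vocab = check_added_tokens(json.loads(ADDED_TOKENS_JSON), VOCAB_SIZE)
print(base_vocab)  # 32000
```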

|

berserk.png

ADDED

|

config.json

ADDED

|

@@ -0,0 +1,40 @@


| 1 |

+

{

|

| 2 |

+

"_name_or_path": "./TinyLlama-1.1B-Chat-v0.3",

|

| 3 |

+

"architectures": [

|

| 4 |

+

"LlavaLlamaForCausalLM"

|

| 5 |

+

],

|

| 6 |

+

"attention_bias": false,

|

| 7 |

+

"bos_token_id": 1,

|

| 8 |

+

"eos_token_id": 2,

|

| 9 |

+

"freeze_mm_mlp_adapter": false,

|

| 10 |

+

"hidden_act": "silu",

|

| 11 |

+

"hidden_size": 2048,

|

| 12 |

+

"image_aspect_ratio": "square",

|

| 13 |

+

"image_grid_pinpoints": null,

|

| 14 |

+

"initializer_range": 0.02,

|

| 15 |

+

"intermediate_size": 5632,

|

| 16 |

+

"max_position_embeddings": 2048,

|

| 17 |

+

"mm_hidden_size": 1024,

|

| 18 |

+

"mm_projector_type": "linear",

|

| 19 |

+

"mm_use_im_patch_token": false,

|

| 20 |

+

"mm_use_im_start_end": false,

|

| 21 |

+

"mm_vision_select_feature": "patch",

|

| 22 |

+

"mm_vision_select_layer": -2,

|

| 23 |

+

"mm_vision_tower": "openai/clip-vit-large-patch14",

|

| 24 |

+

"model_type": "llava",

|

| 25 |

+

"num_attention_heads": 32,

|

| 26 |

+

"num_hidden_layers": 22,

|

| 27 |

+

"num_key_value_heads": 4,

|

| 28 |

+

"pad_token_id": 0,

|

| 29 |

+

"pretraining_tp": 1,

|

| 30 |

+

"rms_norm_eps": 1e-05,

|

| 31 |

+

"rope_scaling": null,

|

| 32 |

+

"rope_theta": 10000.0,

|

| 33 |

+

"tie_word_embeddings": false,

|

| 34 |

+

"torch_dtype": "bfloat16",

|

| 35 |

+

"transformers_version": "4.31.0",

|

| 36 |

+

"tune_mm_mlp_adapter": false,

|

| 37 |

+

"use_cache": true,

|

| 38 |

+

"use_mm_proj": true,

|

| 39 |

+

"vocab_size": 32003

|

| 40 |

+

}
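A few of these fields determine the attention layout, and the arithmetic is easy to check by hand. The sketch below derives the per-head dimension and the number of query heads that share each key/value head (`num_key_value_heads` smaller than `num_attention_heads` indicates grouped-query attention in Llama-style configs). The helper `attention_geometry` is hypothetical, and reading `"mm_projector_type": "linear"` as a single linear map from `mm_hidden_size` to `hidden_size` is an assumption about the LLaVA code, not something this diff confirms.

```python
# Values copied from the config.json diff above.
config = {
    "hidden_size": 2048,
    "num_attention_heads": 32,
    "num_key_value_heads": 4,
    "mm_hidden_size": 1024,
    "mm_projector_type": "linear",
}


def attention_geometry(cfg):
    """Derive head sizes from a Llama-style config dict (hypothetical helper)."""
    heads = cfg["num_attention_heads"]
    kv_heads = cfg["num_key_value_heads"]
    if cfg["hidden_size"] % heads or heads % kv_heads:
        raise ValueError("head counts must divide evenly")
    head_dim = cfg["hidden_size"] // heads
    return {
        "head_dim": head_dim,                     # width of one attention head
        "queries_per_kv_head": heads // kv_heads,  # query heads sharing one K/V head
        "kv_proj_width": kv_heads * head_dim,      # output width of the K and V projections
    }


geometry = attention_geometry(config)
# Assumed (input, output) dimensions of the vision-to-text projector.
projector_shape = (config["mm_hidden_size"], config["hidden_size"])
print(geometry, projector_shape)
```

With these values each head is 64 wide, eight query heads share each key/value head, and the key and value projections are 256 wide.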

|

generation_config.json

ADDED

|

@@ -0,0 +1,4 @@


| 1 |

+

{

|

| 2 |

+

"max_new_tokens": 32,

|

| 3 |

+

"transformers_version": "4.31.0"

|

| 4 |

+

}

|

pytorch_model.bin

ADDED

|

@@ -0,0 +1,3 @@


| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:3c50a2f40ca99c09a008c5a14a6490ef1a3b572c0bd427f1429d5c9113bf2217

|

| 3 |

+

size 2810895914
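The three lines above are a Git LFS pointer, not the weights themselves: the repository stores only the hash and byte count, and the real file is fetched separately. As an illustration, here is a small parser for that pointer text; `parse_lfs_pointer` is a hypothetical helper written for this note, and it assumes the simple `key value` line layout seen here.

```python
# Pointer text for pytorch_model.bin as shown in the diff above.
POINTER = """version https://git-lfs.github.com/spec/v1
oid sha256:3c50a2f40ca99c09a008c5a14a6490ef1a3b572c0bd427f1429d5c9113bf2217
size 2810895914
"""


def parse_lfs_pointer(text):
    """Split a Git LFS pointer into its fields (hypothetical helper)."""
    # Each non-empty line is "<key> <value>".
    fields = dict(line.split(" ", 1) for line in text.splitlines() if line.strip())
    algo, digest = fields["oid"].split(":", 1)
    return {
        "version": fields["version"],
        "hash_algo": algo,
        "digest": digest,
        "size": int(fields["size"]),  # size of the real file in bytes
    }


pointer = parse_lfs_pointer(POINTER)
size_gib = pointer["size"] / 2**30
print(pointer["hash_algo"], f"{size_gib:.2f} GiB")
```

The checkpoint is therefore roughly 2.6 GiB on disk, which is worth knowing before a worker downloads it.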

|

sd.png

ADDED

|

special_tokens_map.json

ADDED

|

@@ -0,0 +1,6 @@


| 1 |

+

{

|

| 2 |

+

"bos_token": "<s>",

|

| 3 |

+

"eos_token": "</s>",

|

| 4 |

+

"pad_token": "<unk>",

|

| 5 |

+

"unk_token": "<unk>"

|

| 6 |

+

}

|

tokenizer.json

ADDED

|

The diff for this file is too large to render.

See raw diff

|

|

|

tokenizer.model

ADDED

|

@@ -0,0 +1,3 @@


| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:9e556afd44213b6bd1be2b850ebbbd98f5481437a8021afaf58ee7fb1818d347

|

| 3 |

+

size 499723

|

tokenizer_config.json

ADDED

|

@@ -0,0 +1,94 @@


| 1 |

+

{

|

| 2 |

+

"add_bos_token": true,

|

| 3 |

+

"add_eos_token": false,

|

| 4 |

+

"added_tokens_decoder": {

|

| 5 |

+

"0": {

|

| 6 |

+

"content": "<unk>",

|

| 7 |

+

"lstrip": false,

|

| 8 |

+

"normalized": false,

|

| 9 |

+

"rstrip": false,

|

| 10 |

+

"single_word": false,

|

| 11 |

+

"special": true

|

| 12 |

+

},

|

| 13 |

+

"1": {

|

| 14 |

+

"content": "<s>",

|

| 15 |

+

"lstrip": false,

|

| 16 |

+

"normalized": false,

|

| 17 |

+

"rstrip": false,

|

| 18 |

+

"single_word": false,

|

| 19 |

+

"special": true

|

| 20 |

+

},

|

| 21 |

+

"2": {

|

| 22 |

+

"content": "</s>",

|

| 23 |

+

"lstrip": false,

|

| 24 |

+

"normalized": false,

|

| 25 |

+

"rstrip": false,

|

| 26 |

+

"single_word": false,

|

| 27 |

+

"special": true

|

| 28 |

+

},

|

| 29 |

+

"32000": {

|

| 30 |

+

"content": "[PAD]",

|

| 31 |

+

"lstrip": true,

|

| 32 |

+

"normalized": false,

|

| 33 |

+

"rstrip": true,

|

| 34 |

+

"single_word": false,

|

| 35 |

+

"special": true

|

| 36 |

+

},

|

| 37 |

+

"32001": {

|

| 38 |

+

"content": "<|im_start|>",

|

| 39 |

+

"lstrip": false,

|

| 40 |

+

"normalized": false,

|

| 41 |

+

"rstrip": false,

|

| 42 |

+

"single_word": false,

|

| 43 |

+

"special": true

|

| 44 |

+

},

|

| 45 |

+

"32002": {

|

| 46 |

+

"content": "<|im_end|>",

|

| 47 |

+

"lstrip": false,

|

| 48 |

+

"normalized": false,

|

| 49 |

+

"rstrip": false,

|

| 50 |

+

"single_word": false,

|

| 51 |

+

"special": true

|

| 52 |

+

}

|

| 53 |

+

},

|

| 54 |

+

"additional_special_tokens": [],

|

| 55 |

+

"bos_token": {

|

| 56 |

+

"__type": "AddedToken",

|

| 57 |

+

"content": "<s>",

|

| 58 |

+

"lstrip": false,

|

| 59 |

+

"normalized": true,

|

| 60 |

+

"rstrip": false,

|

| 61 |

+

"single_word": false

|

| 62 |

+

},

|

| 63 |

+

"clean_up_tokenization_spaces": false,

|

| 64 |

+

"eos_token": {

|

| 65 |

+

"__type": "AddedToken",

|

| 66 |

+

"content": "</s>",

|

| 67 |

+

"lstrip": false,

|

| 68 |

+

"normalized": true,

|

| 69 |

+

"rstrip": false,

|

| 70 |

+

"single_word": false

|

| 71 |

+

},

|

| 72 |

+

"legacy": false,

|

| 73 |

+

"model_max_length": 2048,

|

| 74 |

+

"pad_token": {

|

| 75 |

+

"__type": "AddedToken",

|

| 76 |

+

"content": "[PAD]",

|

| 77 |

+

"lstrip": false,

|

| 78 |

+

"normalized": true,

|

| 79 |

+

"rstrip": false,

|

| 80 |

+

"single_word": false

|

| 81 |

+

},

|

| 82 |

+

"padding_side": "right",

|

| 83 |

+

"sp_model_kwargs": {},

|

| 84 |

+

"tokenizer_class": "LlamaTokenizer",

|

| 85 |

+

"unk_token": {

|

| 86 |

+

"__type": "AddedToken",

|

| 87 |

+

"content": "<unk>",

|

| 88 |

+

"lstrip": false,

|

| 89 |

+

"normalized": true,

|

| 90 |

+

"rstrip": false,

|

| 91 |

+

"single_word": false

|

| 92 |

+

},

|

| 93 |

+

"use_default_system_prompt": true

|

| 94 |

+

}

|

trainer_state.json

ADDED

|

The diff for this file is too large to render.

See raw diff

|

|

|

training_args.bin

ADDED

|

File without changes

|