GFPGAN (CVPR 2021)


GitHub: https://github.com/TencentARC/GFPGAN

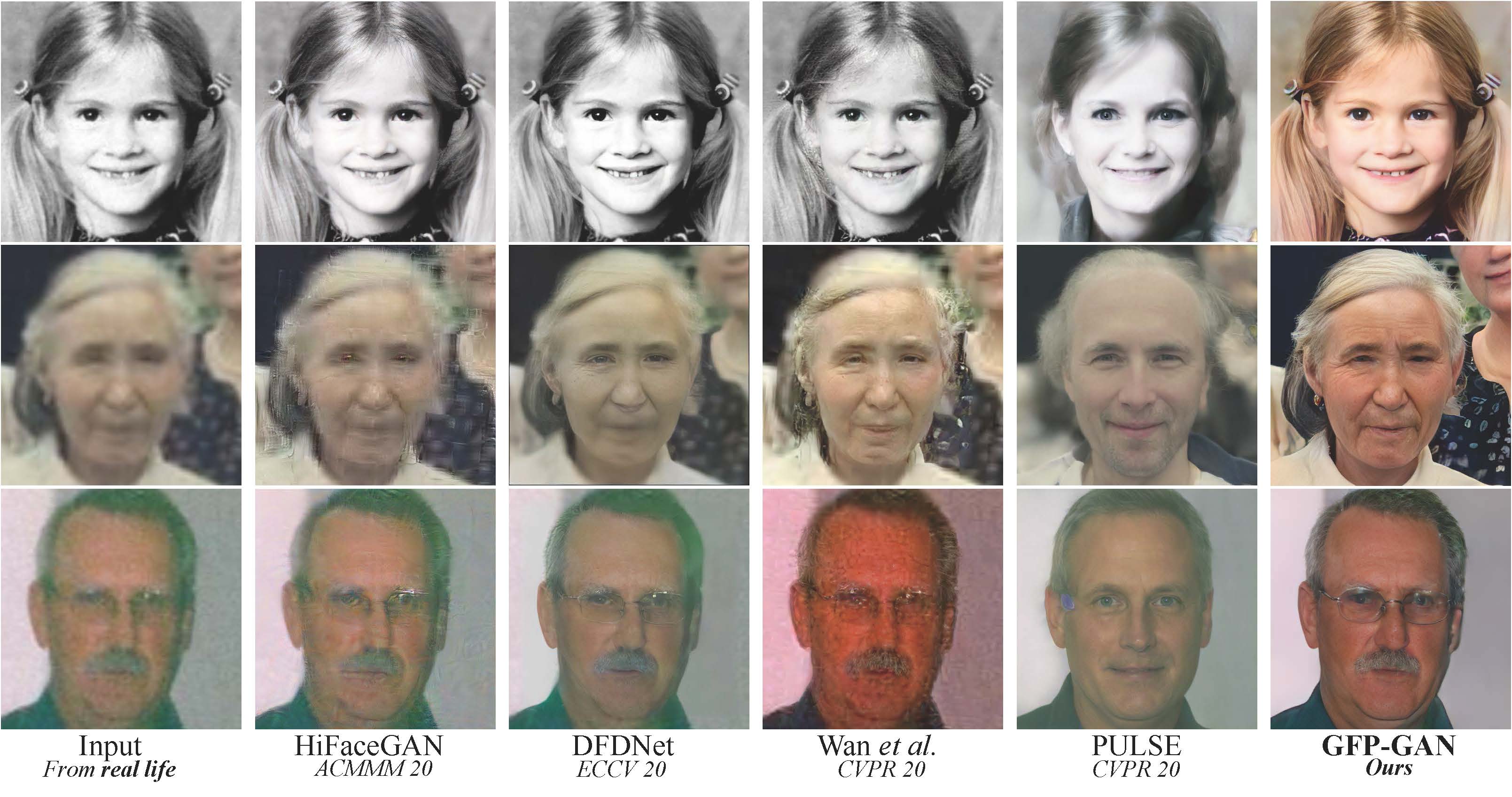

GFPGAN is a blind face restoration algorithm for real-world face images.

:book: GFP-GAN: Towards Real-World Blind Face Restoration with Generative Facial Prior

[Paper] [Project Page] [Demo]

Xintao Wang, Yu Li, Honglun Zhang, Ying Shan

Applied Research Center (ARC), Tencent PCG

Abstract

Blind face restoration usually relies on facial priors, such as facial geometry prior or reference prior, to restore realistic and faithful details. However, very low-quality inputs cannot offer accurate geometric prior while high-quality references are inaccessible, limiting the applicability in real-world scenarios. In this work, we propose GFP-GAN that leverages rich and diverse priors encapsulated in a pretrained face GAN for blind face restoration. This Generative Facial Prior (GFP) is incorporated into the face restoration process via novel channel-split spatial feature transform layers, which allow our method to achieve a good balance of realness and fidelity. Thanks to the powerful generative facial prior and delicate designs, our GFP-GAN could jointly restore facial details and enhance colors with just a single forward pass, while GAN inversion methods require expensive image-specific optimization at inference. Extensive experiments show that our method achieves superior performance to prior art on both synthetic and real-world datasets.
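The channel-split spatial feature transform mentioned in the abstract can be illustrated with a small, dependency-free sketch. It rests on assumptions the abstract does not spell out: the feature channels are split evenly, the first half passes through unchanged, and the second half is modulated by a per-pixel scale and shift derived from the degraded input. The function name `cs_sft` and the list-based layout (a list of channels, each a flat list of pixel values) are illustrative only and are not the repository's API.

```python
def cs_sft(features, scale, shift):
    """Channel-split spatial feature transform (illustrative sketch).

    features: list of C channels, each a flat list of pixel values.
    scale, shift: lists of C - C // 2 channels with the same pixel count.
    Assumption: an even channel split; the first part is kept as-is.
    """
    split = len(features) // 2
    to_modulate = features[split:]
    if len(scale) != len(to_modulate) or len(shift) != len(to_modulate):
        raise ValueError("scale and shift must match the modulated channels")

    # Identity branch: copy the first channels unchanged.
    identity_part = [list(channel) for channel in features[:split]]

    # Modulated branch: element-wise scale * x + shift.
    modulated_part = [
        [a * x + b for x, a, b in zip(channel, s, t)]
        for channel, s, t in zip(to_modulate, scale, shift)
    ]

    # Concatenate both branches along the channel axis.
    return identity_part + modulated_part


# Four channels with two pixels each.
features = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0], [7.0, 8.0]]
scale = [[2.0, 2.0], [0.5, 0.5]]
shift = [[1.0, 1.0], [0.0, 0.0]]
print(cs_sft(features, scale, shift))
```

In this toy example the first two channels are returned untouched, while the last two are rescaled and shifted. This mirrors the balance between realness (unchanged prior features) and fidelity (input-conditioned features) that the abstract describes.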

BibTeX

@InProceedings{wang2021gfpgan,
    author = {Xintao Wang and Yu Li and Honglun Zhang and Ying Shan},
    title = {Towards Real-World Blind Face Restoration with Generative Facial Prior},
    booktitle = {The IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},
    year = {2021}
}

:wrench: Dependencies and Installation

- Python >= 3.7 (Anaconda or Miniconda is recommended)

- PyTorch >= 1.7

- NVIDIA GPU + CUDA
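Before installing, it can help to confirm that the environment meets the requirements listed above. The following is a minimal sketch, not part of the repository; the helper names `parse_major_minor` and `check_environment` are made up for illustration. It only reports problems and does not install anything.

```python
import re
import sys


def parse_major_minor(version):
    """Extract (major, minor) from strings such as '1.7.0+cu110'."""
    match = re.match(r"(\d+)\.(\d+)", version)
    if match is None:
        raise ValueError(f"unrecognized version string: {version!r}")
    return int(match.group(1)), int(match.group(2))


def check_environment():
    """Return a list of problems; an empty list means all checks passed."""
    problems = []
    if sys.version_info < (3, 7):
        problems.append("Python >= 3.7 is required")
    try:
        import torch
    except ImportError:
        problems.append("PyTorch is not installed")
        return problems
    if parse_major_minor(torch.__version__) < (1, 7):
        problems.append(f"PyTorch >= 1.7 is required, found {torch.__version__}")
    if not torch.cuda.is_available():
        problems.append("No CUDA-capable GPU is visible to PyTorch")
    return problems


for problem in check_environment():
    print("WARNING:", problem)
```

If the script prints nothing, the Python, PyTorch, and CUDA checks passed.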

Installation

Clone repo

git clone https://github.com/xinntao/GFPGAN.git
cd GFPGAN

Install dependent packages

# Install basicsr - https://github.com/xinntao/BasicSR
# We use BasicSR for both training and inference
# Set BASICSR_EXT=True to compile the CUDA extensions in BasicSR
# Compilation may take several minutes, please be patient
BASICSR_EXT=True pip install basicsr

# Install facexlib - https://github.com/xinntao/facexlib
# We use the face detection and face restoration helper in the facexlib package
pip install facexlib

pip install -r requirements.txt

:zap: Quick Inference

Download pre-trained models: GFPGANv1.pth

wget https://github.com/TencentARC/GFPGAN/releases/download/v0.1.0/GFPGANv1.pth -P experiments/pretrained_models

python inference_gfpgan_full.py --model_path experiments/pretrained_models/GFPGANv1.pth --test_path inputs/whole_imgs

# for aligned images

python inference_gfpgan_full.py --model_path experiments/pretrained_models/GFPGANv1.pth --test_path inputs/cropped_faces --aligned
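The download and inference steps above can also be driven from Python. The sketch below is an assumption-laden convenience wrapper, not something shipped with the repository: the helper names `download_model` and `build_inference_command` are hypothetical, and only the URL, paths, and flags shown in the commands above are taken from this README.

```python
import sys
import urllib.request
from pathlib import Path

# URL and target folder copied from the wget command above.
MODEL_URL = "https://github.com/TencentARC/GFPGAN/releases/download/v0.1.0/GFPGANv1.pth"
MODEL_DIR = Path("experiments/pretrained_models")


def download_model(url=MODEL_URL, target_dir=MODEL_DIR):
    """Download the checkpoint unless it already exists; return its path."""
    target_dir = Path(target_dir)
    target_dir.mkdir(parents=True, exist_ok=True)
    target = target_dir / url.rsplit("/", 1)[-1]
    if not target.exists():
        urllib.request.urlretrieve(url, str(target))
    return target


def build_inference_command(model_path, test_path, aligned=False):
    """Build the argument list for inference_gfpgan_full.py."""
    cmd = [
        sys.executable,
        "inference_gfpgan_full.py",
        "--model_path", str(model_path),
        "--test_path", str(test_path),
    ]
    if aligned:
        # Only pass --aligned for pre-cropped, aligned face images.
        cmd.append("--aligned")
    return cmd


# Show the command for whole images without running it.
print(" ".join(build_inference_command(MODEL_DIR / "GFPGANv1.pth", "inputs/whole_imgs")))
```

To actually run inference from the repository root, call `download_model()` first and then pass the returned list to `subprocess.run(cmd, check=True)`.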

:computer: Training

We provide the complete training code for GFPGAN.

You can adapt it to your own needs.

- Dataset preparation: FFHQ

- Download pre-trained models and other data. Put them in the experiments/pretrained_models folder.

- Modify the configuration file train_gfpgan_v1.yml accordingly.

- Training

python -m torch.distributed.launch --nproc_per_node=4 --master_port=22021 train.py -opt train_gfpgan_v1.yml --launcher pytorch
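The command above starts four worker processes, one per GPU, through `--nproc_per_node=4`. On a machine with a different number of GPUs that value needs to change. The sketch below builds the same command for an arbitrary GPU count; the helper name `build_training_command` is hypothetical, and the defaults simply mirror the command shown above.

```python
import sys


def build_training_command(num_gpus=4, master_port=22021,
                           config="train_gfpgan_v1.yml"):
    """Build the distributed training command for the given GPU count."""
    if num_gpus < 1:
        raise ValueError("num_gpus must be at least 1")
    return [
        sys.executable, "-m", "torch.distributed.launch",
        f"--nproc_per_node={num_gpus}",   # one worker process per GPU
        f"--master_port={master_port}",   # port used for process rendezvous
        "train.py",
        "-opt", config,
        "--launcher", "pytorch",
    ]


# Print the command for a two-GPU machine without running it.
print(" ".join(build_training_command(num_gpus=2)))
```

The returned list can be passed to `subprocess.run(cmd, check=True)` from the repository root once the dataset, pre-trained models, and configuration file are in place.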

:scroll: License and Acknowledgement

GFPGAN is released under the Apache License, Version 2.0.

:e-mail: Contact

If you have any questions, please email xintao.wang@outlook.com or xintaowang@tencent.com.